Measurable attributes of AGI and promising benchmarks

miroojin / June 2023 (2096 Words, 12 Minutes)

Introduction

Large Language Models have taken the internet by storm and their magical capabilities have sparked a conversation about AGI and its near imminence. Many individuals believe that LLMs have the potential to pave the way for the development of systems with general intelligence, mostly because they have demonstrated a striking ability to perform a variety of complex cognitive tasks that were not even foreseen by their creators. Moreover, LLMs are capable of accomplishing these tasks with minimal or no training examples.

All the best examples of GPT-4, from OpenAI:

— Ben Tossell (@bentossell) March 15, 2023

This ability to be able to understand and perform a wide range of unforeseen tasks in different environments with minimal or no guidance might be one of the most important capabilities that can be engineered into AI systems. It is also the most common interpretation of general intelligence. But general intelligence is a really hard ability to measure. As the developer of an AI system, you cannot predict your system’s performance on unfamiliar tasks in unknown environments using the conventional black box testing approaches, commonly used in AI model evaluation. As a shallow proxy, we base our assessments on the system’s ability to do things that are cognitively challenging for humans. But this approach has been infamously misleading, primarily due to our anthropomorphic bias. What is challenging for us might not be challenging for machines. Achieving impressive results on cherry-picked tasks is no guarantee of an agent possessing human-level general intelligence. LLMs serve as the most recent testament to this principle.

The potential of general-purpose systems is virtually limitless, they possess capabilities that extend beyond their original intended applications. Human beings are prime examples of systems exhibiting general-purpose intelligence. It is therefore understandable why many individuals and organisations aspire to develop AI systems that can match the level of general intelligence observed in humans. But before we make any progress towards developing these systems, we need to have a clear and precise understanding of general intelligence so that we know what we’re working towards and, more importantly, come up with a comprehensive and reliable set of benchmarks and tests that can measure general intelligence in AI systems.

What, exactly, are we trying to measure?

“Viewed narrowly, there seem to be almost as many definitions of intelligence as there were experts asked to define it.” - Robert Sternberg

The definition of general intelligence is as elusive as its conception. The only point of reference we have for general intelligence is the observed manifestation of biological intelligence in humans and animals, which itself is hard to qualify. We seem to possess an intuitive notion of what intelligent behavior looks like while watching our favorite detective show or raising a child, but when pressed for a precise definition, we find ourselves at a loss for words. This difficulty arises from the fact that general intelligence is not an inherent, standalone property of systems; rather, it emerges from intricate interactions among diverse cognitive processes, neural mechanisms, and the environment. Trying to understand and describe this soup of complexity is a highly ambitious endeavor, particularly considering our current limited understanding of biological intelligence.

A more practical approach would be to try and describe general intelligence as a collection of different emergent capabilities that turn out to be really useful for solving problems in complex environments. I’m going to try and narrow down on capabilities that are both broadly applicable across different problem domains and can be adequately quantified.

General intelligence abilities

Task versatility is the ability to perform effectively on a wide range of different tasks. The ability can be evaluated by testing the system on a diverse set of benchmarks that cover various problem domains.

Learning efficiency is the ability to learn effectively and rapidly from a given set of data or experiences. It measures how well the system can acquire knowledge or skills with minimal resources, such as training data, computational power, or time.

Transfer learning is the ability to efficiently reuse prior knowledge, aiming to reduce the amount of training data, computational power or time required for learning new tasks.

Adaptability is a system's ability to modify its behavior and enhance its performance in response to new data or changing situations. One way to evaluate adaptability is by comparing a system's performance before and after exposure to new data or changing environments.

It’s pretty evident that humans are great at all of these abilities. We should strive to create systems that can match a human’s performance along these dimensions.

Interesting approaches

Given that we have recently started transitioning from narrow AI to more general AI systems, there hasn’t been a lot of emphasis on benchmarking general AI till now. Nevertheless, a few interesting approaches have surfaced, which are worth exploring.

The Abstraction and Reasoning Corpus (ARC)

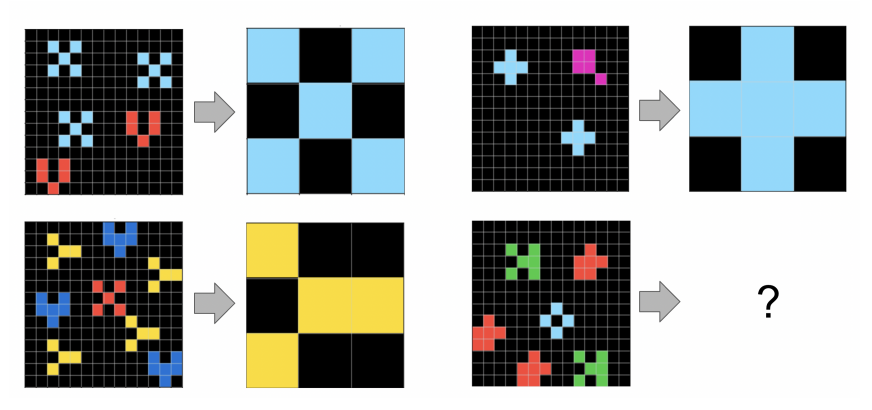

The ARC dataset, created by Francois Chollet, is a set of tasks that are intended to serve as a benchmark for measuring learning efficiency. The task format is very simple: There are one or more sample demonstrations in which a grid of colored pixels is transformed into a new grid. The agent has to infer the abstract transformation rule from the demonstrations and apply it to a test input grid.

Each task contains very few sample demonstrations, which means the agent has to learn the grid transformation rule by looking at a few examples only. According to Chollet, this ability to learn to solve complex tasks from a few demonstrations is a key feature of general intelligence. Since the ARC dataset is fully solvable by humans, Chollet claims that any agent that is able to achieve human-level performance on the benchmark should be able to perform a wide range of tasks of a kind that would normally require human-like fluid intelligence.

The Hutter Prize

The Hutter Prize is a cash prize funded by Marcus Hutter, which rewards data compression improvements on a 1GB Wikipedia dataset to encourage research in AI. Hutter believes that a good compressor will intelligently have to find regularities in data which is an intrinsically hard problem.

According to Hutter, for an agent to make optimal predictions in any environment given a set of prior experiences to learn from, it needs to model (compress) the experiences into the smallest possible summary. This principle is akin to Occam’s razor which is a problem-solving guideline that recommends selecting the simplest possible explanation for any phenomenon.

Hutter also claims that the best text compression algorithms would be the ones that understand the text in the same way that humans would. The better you understand the data, the more you can delete pieces from it during compression and reconstruct the missing pieces during decompression.

For example, consider the missing words in the following sentence:

Deep learning [1] a subfield of [2] learning that involves the use [3] artificial neural [4] to model and understand complex patterns and representations.

Someone who understands English can easily guess that [1] = is and [3] = of. Furthermore, an expert on AI would guess that [2] = machine and [4] = networks. This shows that the better you understand the text, the more you can delete pieces from it without loss. If a compression algorithm matches the reconstruction capabilities of a human, it should be regarded as having the same understanding.

Simulated environments

Simulated environments have been the de-facto way of testing reinforcement learning algorithms. People have come up with different plans for measuring the generalization power of RL agents in simulated environments - by procedurally introducing stochasticity, evaluating across a diverse range of environments, and modeling environments around real-world domains.

Crowd-sourced benchmarks

When it comes to quantitatively measuring the generalization power of AI systems, the ability to handle unforeseen tasks becomes a crucial aspect to evaluate. Traditional AI benchmarks, which typically focus on evaluating performance within a static set of skills, are not of much use in this regard.

A better alternative would be crowd-sourced benchmarks such as BIG Bench and OpenAI’s evals, which are more diverse and dynamic in nature compared to traditional benchmarks. These benchmarks introduce a wide range of failure modes that challenge the system’s adaptability.

A key factor for the success of crowd-sourced benchmarks is that they should have enough people contributing to them regularly with a stream of diverse and high-quality tasks.

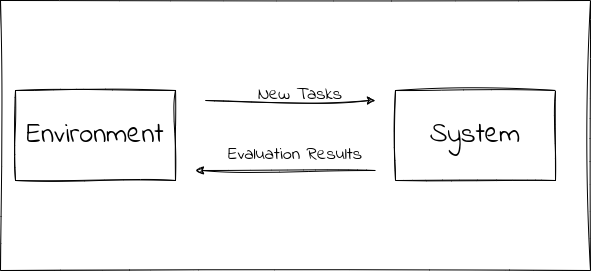

Evolving learning environments

One way of introducing novel tasks for a system to solve is to design dynamic learning environments and problem domains that evolve along with the the capabilities of the system. These environments and domains continuously generate new tasks of increasing difficulty and complexity, enabling the system to progressively enhance its problem-solving skills. This continual co-evolution of the environment complexity and system creates an open-ended learning process which can lead to surprising developments, much like how Darwinian evolution fostered the development of biological intelligence.

Various approaches have been explored to foster task evolution in a useful way including evolutionary algorithms, Generative Adversarial Networks, and hand-crafted heuristics.

Good old Turing Test

The Turing test, one of the earliest experiments devised to evaluate an agent’s capacity to exhibit human-level intelligence, while being deceptively straightforward can still be a good test if conducted in the right way.

The premise of the test is as follows - There is a human judge who talks to a machine and a human without knowing which one is which. The judge can ask them questions or have conversations with them through a computer or other communication methods. If the judge cannot tell which one is the machine and which one is the human based on their responses, then the machine is considered to have passed the test.

A comprehensive version of the test, conceived out of a bet between Mitchell Kapor and Ray Kurzweil which involves three judges, three human participants along with the machine, and 24 hours of interviewing, is a good example of how the test should be conducted.

Conclusion

While none of the approaches listed above offer definitive solutions for validating the existence of human-level general intelligence in AI systems, they do propose interesting alternatives to the common pitfall of relying solely on the performance of systems on specific, intellectually demanding tasks. As we continue to deploy increasingly capable AI systems to assist our civilization in various domains, we will see many more such benchmarks being developed, reflecting the the evolving nature of our understanding and assessment of general intelligence.

Over the past few decades, there have been a few highly publicized encounters between AI systems and human experts in board games such as Chess and Go, as well as video games like Dota 2 and Starcraft. Despite these remarkable advancements, these systems still fall short when it comes to matching the generalization capabilities exhibited by humans.

François Chollet is a artificial intelligence researcher currently working at Google. Chollet is the creator of the Keras deep-learning library, released in 2015, and a main contributor to the TensorFlow machine learning framework.

Marcus Hutter is DeepMind Senior Scientist researching the mathematical foundations of artificial general intelligence.

This hypothesis is based on the Minimum Description Length principle which states that the best explanation, given a limited set of observed data, is the one that permits the greatest compression of the data.

Examples:

Examples:

Examples:

Examples:

Examples:

Examples:

In 2002, Ray Kurzweil and Mitchell Kapor made a bet about whether a computer would pass the Turing Test in the near future. Kurzweil believed that a computer would pass the Turing Test by 2029, while Kapor believed that it would not happen. The terms of the bet can be found here.